Category: Jamf Pro

-

Unlocking Insights: An Introductory Guide to Integrating Jamf Pro and Microsoft Power BI for Powerful Reporting

A Mea Culpa of sorts… If you have been following our series on Jamf Pro and Power BI, you know that the goal was to create an automated method for getting data into Power BI. Or at least that is the underlying goal. As I finished writing Part 3, I immediately started assembling the bits…

-

Unlocking Insights: An Introductory Guide to Integrating Jamf Pro and Microsoft Power BI for Powerful Reporting

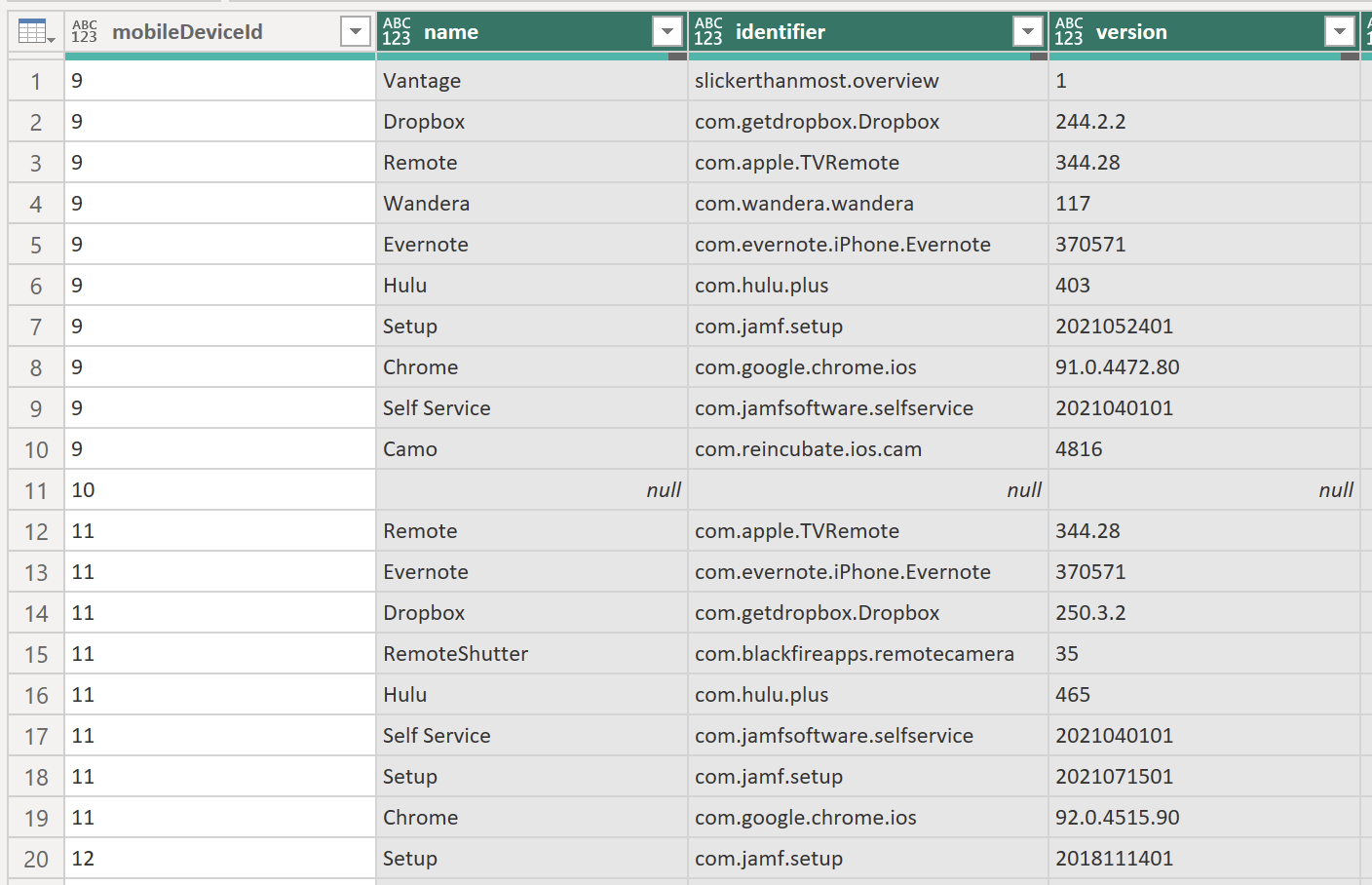

Part 3 – Getting Paginated Data from Jamf Pro

In my previous posts, we covered how to set up Power BI and Jamf Pro to get ready to pull data and how to start pulling data into Power BI using API calls. In this post, I am going to go over how to utilize the…

-

Unlocking Insights: An Introductory Guide to Integrating Jamf Pro and Microsoft Power BI for Powerful Reporting

Part 2: Pulling Data from Jamf Pro

In my last post, I walked you through creating an API Client in Jamf Pro and then using that information to configure Power BI to connect to Jamf Pro. In this post, we will configure Power BI to grab some information from Jamf Pro using API calls. But…

-

Unlocking Insights: An Introductory Guide to Integrating Jamf Pro and Microsoft Power BI for Powerful Reporting

Part 1: Jamf Pro & Power BI Setup

Customers and prospects frequently inquire about Jamf Pro’s dashboard reporting capabilities, especially for executive-level presentations. Given the prevalence of Microsoft licensing in organizations, Power BI emerges as a popular choice for data analysis. However, integrating Jamf Pro with Power BI, particularly with evolving authorization methods, can pose…

-

The Impact of a Mentor

The period between Christmas and New Year serves as a moment of reflection. The author takes a nostalgic journey through the mentors who have influenced their life, ranging from parents to managers. Each of these figures has shared invaluable life lessons, contributing to the author’s personal and professional development. The author urges readers to acknowledge…

-

New Year, New Hosting

Happy (early) New Year! I hope everyone had a very Merry Christmas or Happy Hanukkah or whatever holiday you may have celebrated (or be celebrating). Over the holiday break I wanted to change things up around here, so I decided to move my hosting over to Bluehost and freshen up the theme. I…

-

JAWA Adventures Part 2

In my previous post, Intro to JAWA – Your Automation Buddy, I went over what JAWA is, a little about why it was created, and how to get started with it. In this post we’ll dive into the problem I was recently trying to solve for one of my customers. But first,…

-

Intro to JAWA – Your Automation Buddy

Today I will start a new short series of posts around JAWA, the Jamf Automation + WebHook Assistant. This first post will be a little intro, along with setting up our story arc and the workflow idea I needed to solve.

The What & The Why

JAWA was born out of a need to provide…

-

Postman Advanced – Passing Data

In my previous posts about Postman I showed you how to set up Postman for working with Jamf Pro, how to create and update policies, how to gather our queries into collections, and much more. In this post I’m going to expand a little on our use of the Runner functionality which I covered in Part…